LLMs + Structured Output as a Translation Service

Other languages: Español Français Deutsch 日本語 한국어 Português 中文

In an earlier post dedicated to localizing an app with AI, we discussed automating the translation of UI strings. For that task, I chose a translation tool over an LLM, based on the following rationale:

…I was planning to use OpenAI’s GPT3.5 Turbo, but since it is not strictly a translation model, it requires extra effort to configure the prompt. Also, its results tend to be less stable, so I chose a dedicated translation service that first sprung to mind…

Later that year, several LLM providers introduced the structured output feature, making LLMs significantly more suitable for the discussed use-case. Today, we’ll take a more detailed look at the problem and how this feature solves it.

The problem

LLMs are inherently non-deterministic, which means they might produce different outputs for the same input. This randomness makes LLMs “creative”, but it also reduces their reliability when it is required to follow a specific output format.

Consider the translation use-case as an example. A naïve solution would be to describe the required format in the prompt:

> Translate 'cat' to Dutch. ONLY GIVE A TRANSLATION AND NOTHING ELSE.This works, although occasionally, an LLM will get the instructions wrong:

> Certainly, allow me to provide the translation for you.

The word 'cat' is translated as 'kat' in Dutch. Sometimes, female cats may also be referred to as 'poes'.Since the application expects a single word as a response, anything other than a single word might lead to errors or corrupted data. Even with additional checks and retries, such inconsistencies compromise the reliability of the workflow.

What is structured output

Structured output addresses the issues with inconsistent formatting by letting you define a schema for the response. For example, you can request a structure like this:

{

"word": "cat",

"target_locale": "nl",

"translation_1": "kat",

"translation_2": "poes"

}Under the hood, the implementation of structured output can vary between models. Сommon approaches include:

- using a finite state machine to track the transitions between tokens and prune generation paths that violate the provided schema

- modifying the probabilities of the candidate tokens to decrease the likelihood of selecting non-compliant options

- fine-tuning the model with a dataset focused on recognizing JSON schemas and other structured data formats

These additional mechanisms help achieve higher reliability compared to simply adding format instructions to the prompt.

Code

Let’s update the translation script. To start, we’ll define the object that represents the schema:

response_format={

"type": "json_schema",

"json_schema": {

"name": "translation_service",

"schema": {

"type": "object",

"required": [

"word",

"translation"

],

"properties": {

"word": {

"type": "string",

"description": "The word that needs to be translated."

},

"translation": {

"type": "string",

"description": "The translation of the word in the target language."

}

},

"additionalProperties": False

},

"strict": True

}

}Next, we can use the schema object in the function that performs the request:

def translate_property_llm(value, target_lang):

headers = {

'Content-Type': 'application/json',

'Authorization': f'Bearer {openai_key}',

}

url = 'https://api.openai.com/v1/chat/completions'

data = {

'model': 'gpt-4o-mini',

'response_format': response_format,

'messages': [

{'role': 'system',

'content': f'Translate "{value}" to {target_lang}. '

f'Only provide the translation without any additional text or explanation.'},

{'role': 'user', 'content': value}

],

}

response = requests.post(url, headers=headers, data=json.dumps(data))

try:

content_json = json.loads(response.json()["choices"][0]["message"]["content"])

return content_json["translation"]

except (json.JSONDecodeError, KeyError) as e:

raise ValueError(f"Failed to parse translation response: {str(e)}")That’s it for coding! For more context, you’re welcome to explore and experiment with the project in the GitHub repository.

Comparison with the previous approach

The translation process took a little longer for the updated version compared to the translation service-based one. That said, the provided code is the simplest working example, and we could make it much faster by sending the requests asynchronously.

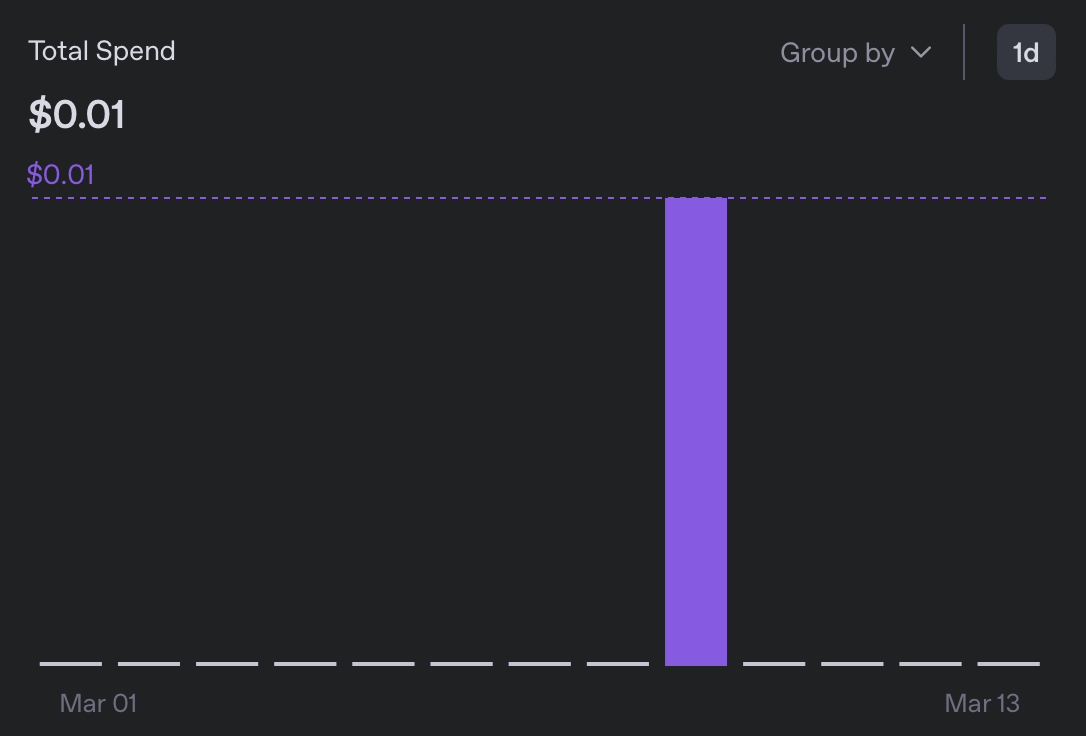

In terms of expenses, translating to 5 locales cost me less that 0.01$, which is more than 10 times cheaper than the previous version:

Skimming through the resulting message bundles, I didn’t notice anything suspicious. While I can’t read any of the newly added languages, the formatting looks good, and I didn’t come across any occurrences of ‘Sure, I’ll translate that for you’🙂

Why is it important

Although the described experiment addresses a niche problem, it highlights a broader and more significant aspect of large language models. While they may or may not outperform specialized services in solving a particular task, what is truly remarkable about LLMs is their ability to serve as a quick and practical solution and a general-purpose alternative to more specialized tools.

As someone with only a basic familiarity with machine learning, I used to avoid entire classes of tasks, because even a mediocre solution required expertise I didn’t have. Now, however, it has become incredibly easy to cobble together classifiers, translation services, LLMs-as-a-judge, and countless other applications.

Of course, quality is still a consideration, but realistically, we don’t need 100% perfection for many problems anyway. One less reason not to hack!

Conclusion

I couldn’t think of a meaningful way to wrap up this article, so here is a photo of the cat I met today (not AI-generated!):